Video Streaming Protocols

What Is a Streaming Protocol?

A streaming protocol is a set of rules and methods that define how audio, video, or other media files are delivered over the internet in real time.

Instead of downloading the whole file before playing (which takes a lot of time), streaming protocols break content into small chunks and send them in sequence. This way, playback begins almost instantly while the rest of the chunks continue to load.

Remember: The purpose of streaming protocols is to ensure smooth delivery of media, adapting to different devices, networks, and bandwidth conditions.

In short, streaming protocols are like “delivery system rules” that ensure videos, music, or live broadcasts reach users quickly, smoothly, and in the best possible quality.

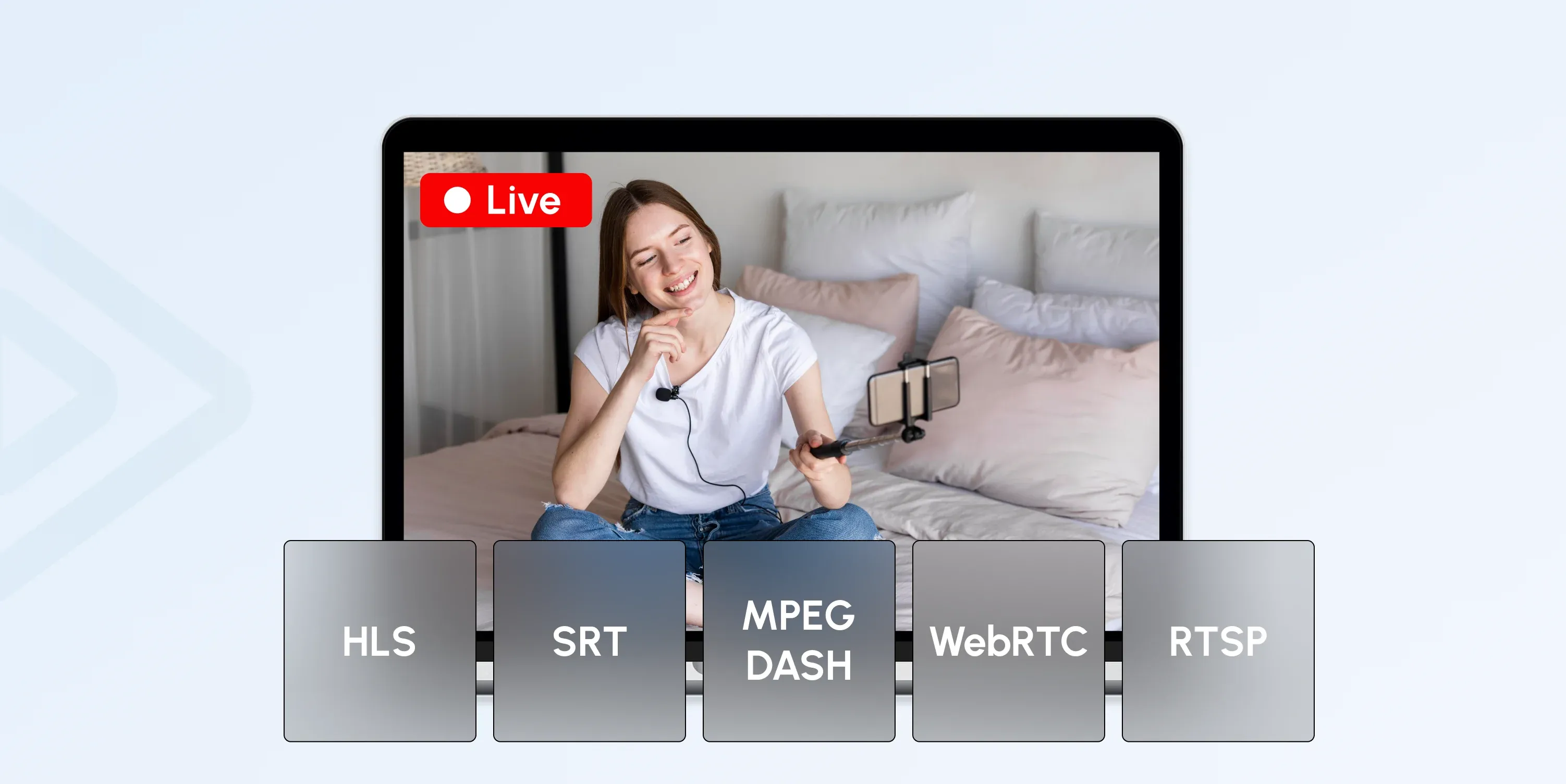

Types of Video Streaming Protocols

1. HTTP-Based Adaptive Streaming Protocols (the most common today)

These protocols deliver video over standard HTTP by splitting it into small segments and allowing the player to switch between different quality levels based on network speed. They are optimized for scalability and compatibility with web infrastructures (CDNs, caches, browsers).

Examples:

- HLS (HTTP Live Streaming) – Apple’s widely used protocol.

- MPEG-DASH – an open standard supported across platforms.

Purpose:

These protocols made large-scale video delivery possible without specialized servers, since they use ordinary HTTP infrastructure (CDNs, caches, web servers). They power most modern OTT platforms, VOD libraries, and live-streaming services.

2. Real-Time Protocols (best for low-latency or live delivery)

These protocols focus on sending continuous audio and video streams with minimal delay, from a few seconds down to well under a second, making them ideal for interactive or time-sensitive use cases. Instead of segmenting content into files like HTTP-based protocols, they push a continuous stream over a persistent connection, often over UDP (SRT, RIST, WebRTC), although RTMP runs over TCP.

Examples:

- RTMP (Real-Time Messaging Protocol) – Adobe’s legacy protocol; still used for ingesting live streams into CDNs, often converted to HLS/DASH for playback.

- RTSP (Real-Time Streaming Protocol) – used in IP cameras and surveillance systems.

- SRT (Secure Reliable Transport) – newer, open-source, designed for reliable, low-latency contribution feeds.

- RIST (Reliable Internet Stream Transport) – similar to SRT, aimed at professional broadcast workflows.

- WebRTC (Web Real-Time Communication) – designed for real-time video chats, conferencing, and ultra-low-latency streaming.

Purpose:

Real-time protocols minimize delay so that audio and video feel “live” and interactive. They are designed for situations where every second counts (e.g., video calls, live auctions, online gaming, or professional broadcast feeds). The priority is speed and immediacy, ensuring viewers and participants can engage in real time rather than waiting for buffered playback.

3. Legacy Protocols

These are older protocols that were once widely used for online video delivery. They relied on custom players (e.g., Flash, Windows Media Player) and proprietary servers, making them less efficient and incompatible with today’s web ecosystem.

Today, legacy protocols have largely been replaced by HTTP-based adaptive streaming.

Examples:

- MMS (Microsoft Media Server) – obsolete, once used by Windows Media Player and Windows Media Services.

- HDS (HTTP Dynamic Streaming) – Adobe’s HTTP-based approach, now deprecated.

- P2P Streaming Protocols – decentralized systems (e.g., BitTorrent-based live streaming).

Purpose:

Legacy protocols introduced the very first methods of online video delivery, long before modern web standards existed. Although limited by plugins and proprietary players, their purpose was to prove that continuous video could be streamed over the internet rather than downloaded.

Why are Legacy Protocols Outdated?

Although legacy protocols are now outdated, they laid the foundation for today’s streaming technologies. So, why are these protocols no longer good enough?

Simply put, they were built for an earlier internet environment and don’t meet the needs of modern streaming. Let’s break it down.

1. Limited Device Support

Most modern browsers and devices have dropped support for legacy protocols. For example, browsers needed Adobe Flash Player to play RTMP streams, and Flash reached end of life in 2020 and is no longer supported.

2. High Latency and Inefficiency

Legacy protocols were not optimized for adaptive bitrate streaming. They often deliver fixed-quality streams with higher delays, which doesn’t suit today’s demand for low-latency live events and smooth playback.

3. Poor Scalability

These protocols were designed before content delivery networks (CDNs) became the standard. They don’t scale efficiently to millions of viewers across global infrastructures.

4. Security Limitations

Legacy protocols lack modern encryption and DRM integration, making them less secure compared to HTTP-based adaptive streaming.

5. Poor Compatibility with Modern Infrastructure

The industry has shifted to HTTP-based protocols (HLS and MPEG-DASH, often packaged with CMAF) that work seamlessly with standard web servers, caching layers, and CDNs. Legacy protocols require specialized servers, which increases cost and complexity.

6. End of Vendor Support

Vendors and platforms have discontinued support for legacy protocols, meaning updates, bug fixes, and optimizations are no longer provided.

Therefore, legacy protocols are outdated because they can’t keep up with modern streaming needs—namely, adaptive, secure, scalable, and low-latency delivery across all devices.

Comparison of Major Video Streaming Protocols

| Protocol | Latency | Use Case | Adoption | Support |
|---|---|---|---|---|
| HLS (HTTP Live Streaming) | 6–30 sec (standard) / 2–5 sec (with Low-Latency HLS) | On-demand & live streaming at scale | Extremely high – default for iOS/tvOS, widely used across web and OTT | Native in Apple devices, browsers via Media Source Extensions (MSE), most players/CDNs |
| MPEG-DASH | 6–30 sec (standard) / ~3–6 sec (low-latency mode) | On-demand & live streaming, adaptive bitrate | High in enterprise/broadcast, not supported on iOS Safari natively | Broad support in Android, browsers (Chrome, Firefox, Edge), smart TVs, and set-top boxes |
| RTMP | 2–5 sec | Contribution (ingest) to streaming platforms (Twitch, YouTube Live, FB Live) | Legacy but still dominant for ingest | Supported by OBS, encoders, but playback needs conversion to HLS/DASH |
| RTSP | 1–5 sec | Surveillance cameras, security feeds, and video conferencing | Moderate – niche in CCTV/IP cameras | Native in many IP cameras, some mobile/desktop players |
| SRT (Secure Reliable Transport) | <1 sec to 3 sec (depending on network) | Professional broadcast, remote production, contribution feeds | Rapidly growing in broadcast & live events | Open-source, supported by encoders, broadcasters, and CDNs |
| RIST (Reliable Internet Stream Transport) | <1 sec to 3 sec | Professional broadcast, contribution feeds | Growing in niche broadcast workflows | Supported by vendors targeting broadcasters |
| WebRTC | Sub-500 ms (ultra-low latency) | Real-time communication, video calls, interactive streaming (betting, auctions, gaming) | Very high for conferencing, rising for interactive OTT | Native in browsers (Chrome, Safari, Firefox, Edge), mobile SDKs, and many platforms |
| Smooth Streaming (Microsoft) | 6–30 sec | Older adaptive streaming for the Windows ecosystem | Declining – replaced mainly by HLS/DASH | Legacy support in older Microsoft platforms |
| HDS (Adobe HTTP Dynamic Streaming) | 6–30 sec | Older Flash-based adaptive streaming | Obsolete | No modern support |

Which Protocol Should You Use?

- For general OTT services (e.g., Netflix, Disney+, YouTube), the primary standards are HLS and MPEG-DASH.

- For live contribution, RTMP is still used for ingest, while SRT and RIST are gaining ground in professional workflows.

- For real-time and ultra-low latency, WebRTC is the go-to.

- Legacy protocols, such as Smooth Streaming and HDS, are effectively being phased out.

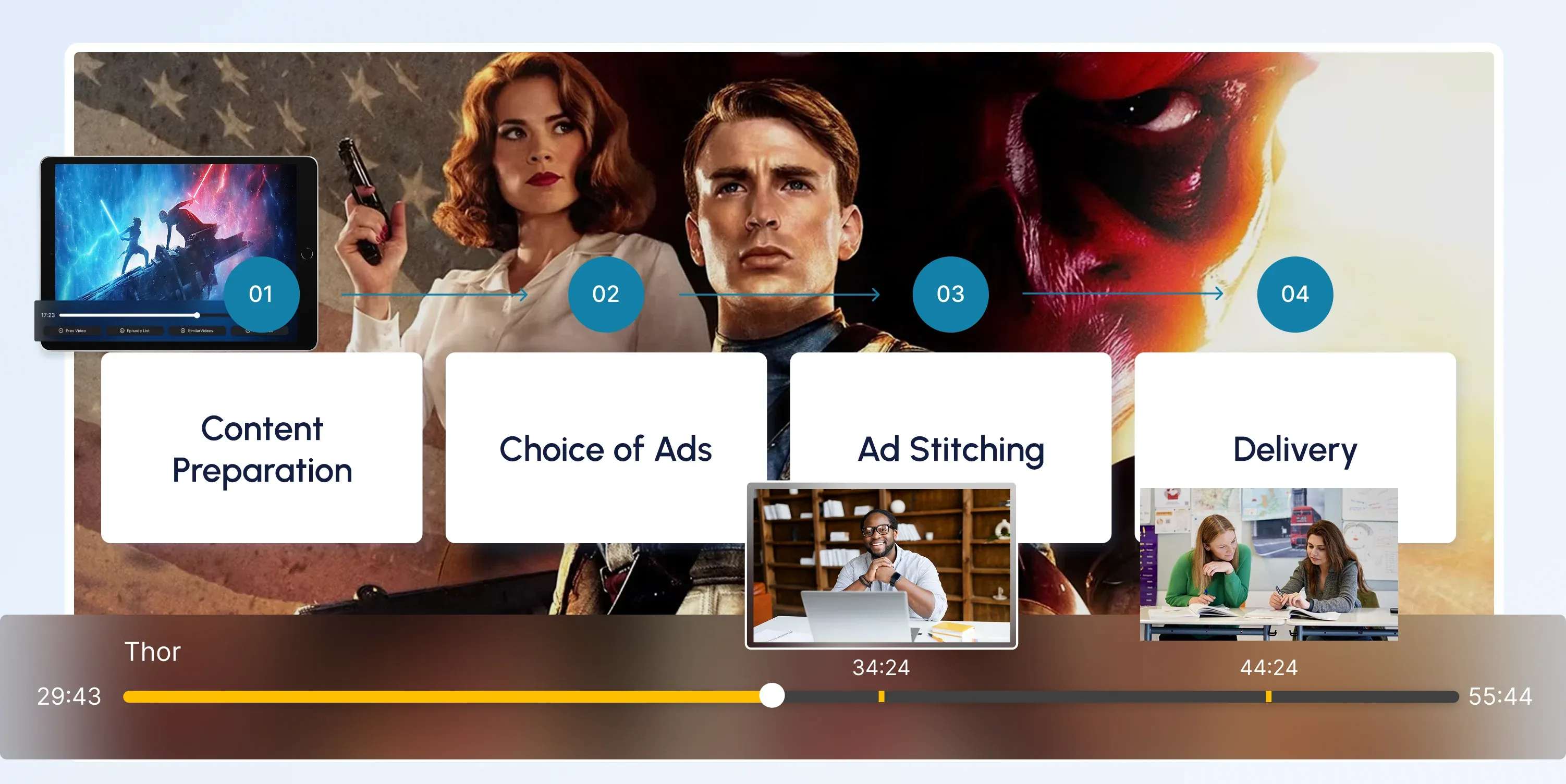

How Do Video Streaming Protocols Work?

The main purpose of video streaming protocols is to set the rules for smooth video delivery from the server to the viewer’s device.

Here’s the whole process step-by-step:

Step 1: Preparation

The video is encoded into a compressed format, often at several quality levels (different bitrates and resolutions).

Step 2: Breaking into Pieces

Instead of sending the whole file, the video is split into small chunks (segments or packets).
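As a rough illustration of this step, the Python sketch below plans fixed-length segment boundaries for a video of known duration. It assumes the encoder places a keyframe at every boundary (real segmenters can only cut on keyframes), and `plan_segments` and its parameters are hypothetical names, not part of any particular tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    index: int       # position in the sequence, starting at 0
    start: float     # start time in seconds
    duration: float  # length in seconds


def plan_segments(total_duration: float, target_duration: float) -> list[Segment]:
    """Split a timeline into back-to-back segments no longer than target_duration.

    Assumes a keyframe exists at every boundary; the last segment may be shorter.
    """
    if total_duration <= 0 or target_duration <= 0:
        raise ValueError("durations must be positive")
    segments = []
    start = 0.0
    while start < total_duration:
        duration = min(target_duration, total_duration - start)
        segments.append(Segment(len(segments), start, duration))
        start += duration
    return segments


if __name__ == "__main__":
    for segment in plan_segments(25.0, 10.0):
        print(segment)
```

A 25-second clip with a 10-second target yields two full segments and a final 5-second one.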

Step 3: Delivery

The protocol decides how those chunks move across the internet:

- HTTP-based protocols (HLS, MPEG-DASH) send them as small files over regular web servers.

- Real-time protocols (such as WebRTC) send them as a continuous flow of packets, frame by frame, with very low delay.

Step 4: Manifest or Playlist

Adaptive protocols include a “map” file, called a playlist or manifest (.m3u8 for HLS, .mpd for MPEG-DASH), that tells the player which chunks exist and at what quality.
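On the player side, reading this map is mostly text parsing. The following is a minimal Python sketch, assuming an HLS multivariant (“master”) playlist in which each `#EXT-X-STREAM-INF` tag is followed by the URI of one quality level. The `parse_master_playlist` function and the sample playlist paths are illustrative; a production player handles many more tags and edge cases.

```python
from __future__ import annotations

import re

# KEY=VALUE pairs; VALUE is either a quoted string (which may contain commas) or a bare token.
ATTR_RE = re.compile(r'([A-Z0-9-]+)=("[^"]*"|[^,]*)')

# Hypothetical two-rendition playlist used for the demo below.
SAMPLE_MASTER = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360,CODECS="avc1.4d401e,mp4a.40.2"
360p/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2500000,RESOLUTION=1280x720,CODECS="avc1.4d401f,mp4a.40.2"
720p/index.m3u8
"""


def parse_master_playlist(text: str) -> list[dict]:
    """Return one dict per quality level listed in an HLS multivariant playlist."""
    variants = []
    pending = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("#EXT-X-STREAM-INF:"):
            attr_text = line.split(":", 1)[1]
            attrs = {key: value.strip('"') for key, value in ATTR_RE.findall(attr_text)}
            pending = {
                "bandwidth": int(attrs["BANDWIDTH"]),  # peak bits per second
                "resolution": attrs.get("RESOLUTION"),
                "codecs": attrs.get("CODECS"),
            }
        elif line and not line.startswith("#") and pending is not None:
            # The first URI line after the tag points to that rendition's media playlist.
            pending["uri"] = line
            variants.append(pending)
            pending = None
    return variants


if __name__ == "__main__":
    for variant in parse_master_playlist(SAMPLE_MASTER):
        print(variant)
```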

Step 5: Player Side

The video player fetches chunks, buffers a few seconds, and plays them in order. If bandwidth changes, it can switch to a higher or lower quality chunk.
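The quality switch can be sketched as a simple throughput rule: estimate bandwidth from how fast the last chunk downloaded, then pick the highest bitrate that fits under a safety margin. This is a hypothetical, simplified heuristic with an assumed 0.8 safety factor; real players typically also smooth their throughput estimates and take the current buffer level into account.

```python
from __future__ import annotations


def estimate_throughput_bps(chunk_bytes: int, download_seconds: float) -> float:
    """Throughput implied by one chunk download, in bits per second."""
    if download_seconds <= 0:
        raise ValueError("download time must be positive")
    return chunk_bytes * 8 / download_seconds


def choose_bitrate(ladder_bps: list[int], throughput_bps: float,
                   safety_factor: float = 0.8) -> int:
    """Pick the highest rendition bitrate that fits under a safety margin.

    Falls back to the lowest rendition when nothing fits, so playback can continue.
    """
    if not ladder_bps:
        raise ValueError("the bitrate ladder is empty")
    budget = throughput_bps * safety_factor
    affordable = [bitrate for bitrate in ladder_bps if bitrate <= budget]
    return max(affordable) if affordable else min(ladder_bps)


if __name__ == "__main__":
    ladder = [800_000, 2_500_000, 5_000_000]
    measured = estimate_throughput_bps(chunk_bytes=1_000_000, download_seconds=2.0)
    print(measured, choose_bitrate(ladder, measured))
```

For a ladder of 0.8, 2.5, and 5 Mbit/s and a measured 4 Mbit/s, the 0.8 factor leaves a 3.2 Mbit/s budget, so the player picks 2.5 Mbit/s.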

Step 6: Playback

The player decodes the chunks and shows smooth video and audio to the user.

Simply put, video streaming protocols define how video chunks are packaged, transported, and switched in quality so viewers get a smooth stream on any device.

The Most Popular Video Streaming Protocols: Quick Breakdown

1. HLS (HTTP Live Streaming)

Created by: Apple

It is the most universal, easy-to-scale protocol in use.

How it works: Splits video into small 2–10 second chunks, delivered over regular HTTP. A playlist file (.m3u8) tells the player which chunks to fetch.
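To make the playlist concrete, here is a minimal Python sketch that writes an HLS media playlist using tags defined in the HLS specification (RFC 8216). The segment file names are hypothetical, and the sketch covers only the basic tags, not encryption, discontinuities, or low-latency extensions.

```python
from __future__ import annotations

import math


def build_media_playlist(segment_uris: list[str], durations: list[float],
                         ended: bool = True) -> str:
    """Render a minimal HLS media playlist (.m3u8) listing the given segments."""
    if not segment_uris or len(segment_uris) != len(durations):
        raise ValueError("need one duration per segment URI")
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",  # version 3 allows decimal EXTINF durations
        # Whole seconds; ceil() guarantees it is no shorter than any segment.
        f"#EXT-X-TARGETDURATION:{math.ceil(max(durations))}",
        "#EXT-X-MEDIA-SEQUENCE:0",  # sequence number of the first listed segment
    ]
    for uri, duration in zip(segment_uris, durations):
        lines.append(f"#EXTINF:{duration:.3f},")  # duration of the segment that follows
        lines.append(uri)
    if ended:
        lines.append("#EXT-X-ENDLIST")  # no more segments will be added (on-demand)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_media_playlist(["seg0.ts", "seg1.ts", "seg2.ts"], [10.0, 10.0, 5.0]))
```

Passing `ended=False` leaves out `#EXT-X-ENDLIST`, which is how a live playlist signals that more segments are coming; a real live server would also advance `#EXT-X-MEDIA-SEQUENCE` as old segments drop off, which this sketch does not do.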

Strengths:

- Works on almost all devices (iOS, Android, browsers, TVs).

- Supports Adaptive Bitrate Streaming (automatically changes quality).

- Easy to scale with CDNs (uses standard web servers).

Weakness:

- Higher latency (usually 6–30 seconds), although Low-Latency HLS can bring it down to about 2–5 seconds.
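The latency figure follows largely from segment length. RFC 8216 advises players not to start a live stream less than three target durations from the end of the playlist, so a rough estimate is three segment durations plus encoding and delivery overhead. The sketch below encodes that rule of thumb; the function name is hypothetical and the overhead value is an assumed input, not a measurement.

```python
def estimate_hls_live_latency(segment_seconds: float, segments_behind_edge: int = 3,
                              overhead_seconds: float = 0.0) -> float:
    """Rule-of-thumb live latency for standard (non-low-latency) HLS, in seconds.

    segments_behind_edge: how far from the end of the playlist the player starts;
        RFC 8216 advises at least three target durations.
    overhead_seconds: assumed extra delay for encoding, packaging, and CDN delivery.
    """
    return segments_behind_edge * segment_seconds + overhead_seconds


if __name__ == "__main__":
    for seconds in (2, 6, 10):
        print(f"{seconds}-second segments -> about {estimate_hls_live_latency(seconds):.0f} s")
```

With 6-second segments this gives about 18 seconds before any overhead, while 2-second segments bring it to about 6 seconds, which is why shorter segments (and Low-Latency HLS) are used to cut delay.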

Best for: Live and on-demand streaming at scale (YouTube Live, news, sports).

2. MPEG-DASH (Dynamic Adaptive Streaming over HTTP)

Created by: MPEG standards group

It is powerful and codec-flexible, great for global services.

How it works: Similar to HLS but not tied to Apple. Uses a manifest (.mpd) file and supports multiple codecs (H.264, H.265, VP9, AV1).
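For comparison with the HLS playlist, here is a minimal Python sketch that builds a static (on-demand), video-only MPD with the standard library’s `xml.etree.ElementTree`. The element and attribute names come from the DASH schema, but the segment naming pattern, the single H.264 codec string, and the `build_static_mpd` helper are assumptions for illustration; real packagers emit considerably more detail.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET

DASH_NS = "urn:mpeg:dash:schema:mpd:2011"


def build_static_mpd(duration_s: int, segment_s: int,
                     renditions: list[tuple[str, int, int, int]]) -> str:
    """Render a minimal on-demand MPEG-DASH manifest (.mpd) with one video AdaptationSet.

    renditions: (id, bandwidth_bps, width, height) for each quality level.
    """
    mpd = ET.Element("MPD", {
        "xmlns": DASH_NS,
        "type": "static",  # on-demand, not live
        "mediaPresentationDuration": f"PT{duration_s}S",  # ISO 8601 duration
        "minBufferTime": f"PT{segment_s}S",
        "profiles": "urn:mpeg:dash:profile:isoff-live:2011",
    })
    period = ET.SubElement(mpd, "Period")
    video = ET.SubElement(period, "AdaptationSet", {
        "mimeType": "video/mp4",
        "codecs": "avc1.4d401f",  # assumed: every rendition is H.264 Main profile
        "segmentAlignment": "true",
    })
    # The player substitutes $RepresentationID$ and $Number$ to build segment URLs.
    ET.SubElement(video, "SegmentTemplate", {
        "initialization": "$RepresentationID$/init.mp4",
        "media": "$RepresentationID$/seg-$Number$.m4s",
        "startNumber": "1",
        "timescale": "1",
        "duration": str(segment_s),
    })
    for rep_id, bandwidth, width, height in renditions:
        ET.SubElement(video, "Representation", {
            "id": rep_id,
            "bandwidth": str(bandwidth),
            "width": str(width),
            "height": str(height),
        })
    return ET.tostring(mpd, encoding="unicode")


if __name__ == "__main__":
    print(build_static_mpd(30, 4, [("360p", 800_000, 640, 360), ("720p", 2_500_000, 1280, 720)]))
```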

Strengths:

- Codec-agnostic (works with many video formats).

- Widely used on smart TVs and set-top boxes.

- Powerful for large international platforms.

Weakness:

- Not natively supported in Safari on iOS, so services typically also offer HLS for Apple devices.

Best for: Global streaming platforms (Netflix, Amazon Prime).

3. CMAF (Common Media Application Format)

Created by: Apple and Microsoft (standardized by MPEG as ISO/IEC 23000-19)

It is a common packaging format that lets HLS and MPEG-DASH share the same media files, reducing costs.

How it works: A standardized way of packaging video chunks so the same files can be used for both HLS and MPEG-DASH.
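CMAF segments are fragmented MP4 (ISO Base Media File Format) data made of length-prefixed “boxes”; a media segment is typically a `moof` box (fragment metadata) followed by an `mdat` box (the media samples), optionally preceded by `styp`. The Python sketch below lists the top-level boxes in a buffer, a quick way to inspect a segment. The function names are hypothetical, and the sketch does not descend into nested boxes.

```python
from __future__ import annotations

import struct


def make_box(box_type: bytes, payload: bytes = b"") -> bytes:
    """Build one ISO BMFF box: 4-byte big-endian size, 4-byte type, then the payload."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


def list_top_level_boxes(data: bytes) -> list[tuple[str, int]]:
    """Return (type, size) for each top-level box in a CMAF/fragmented-MP4 buffer."""
    boxes = []
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack_from(">I4s", data, offset)
        header_size = 8
        if size == 1:  # a 64-bit "largesize" follows the type field
            if offset + 16 > len(data):
                raise ValueError(f"truncated box header at offset {offset}")
            (size,) = struct.unpack_from(">Q", data, offset + 8)
            header_size = 16
        elif size == 0:  # the box extends to the end of the data
            size = len(data) - offset
        if size < header_size:
            raise ValueError(f"corrupt box at offset {offset}")
        boxes.append((box_type.decode("latin-1"), size))
        offset += size
    return boxes


if __name__ == "__main__":
    # Hypothetical stand-in for a CMAF media segment: styp, then a moof/mdat pair.
    segment = (make_box(b"styp", b"cmfs" + bytes(4))
               + make_box(b"moof", bytes(16))
               + make_box(b"mdat", b"\x01\x02\x03"))
    print(list_top_level_boxes(segment))
```

Because both HLS (via `#EXT-X-MAP` and segment URIs) and MPEG-DASH (via `SegmentTemplate`) can point at the same boxes on disk, one packaging pass can serve both protocols.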

Strengths:

- Reduces storage and CDN caching costs (one set of segments serves both HLS and MPEG-DASH).

- Enables low-latency streaming when combined with LL-HLS or LL-DASH.

Weakness:

- Still requires adoption by players and encoders.

Best for: Services that deliver to many devices but want efficiency.

4. RTMP (Real-Time Messaging Protocol)

Created by: Macromedia, later acquired by Adobe (for Flash Player, now retired)

It is legacy, now mainly used for ingest.

How it works: Sends video as a continuous stream over a persistent TCP connection.
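That persistent connection (usually on TCP port 1935) opens with a fixed handshake. Under the simple, unencrypted handshake in Adobe’s published RTMP specification, the client first sends C0, a single version byte (3), and C1, 1536 bytes made of a 4-byte timestamp, 4 zero bytes, and random data. The Python sketch below only builds that first message; the server’s S0/S1/S2 replies, the client’s C2, and the chunked messages that follow are out of scope, and the function name is hypothetical.

```python
import os
import struct

RTMP_VERSION = 3             # C0 version byte for plain (unencrypted) RTMP
HANDSHAKE_CHUNK_SIZE = 1536  # C1, C2, S1, and S2 are each 1536 bytes
DEFAULT_RTMP_PORT = 1935     # RTMP's usual TCP port


def build_c0_c1(timestamp: int = 0) -> bytes:
    """Build the client's opening handshake bytes: C0 (1 byte) followed by C1 (1536 bytes)."""
    c0 = bytes([RTMP_VERSION])
    # C1 layout: 4-byte timestamp, 4 zero bytes, then 1528 bytes of random data.
    c1 = (struct.pack(">II", timestamp & 0xFFFFFFFF, 0)
          + os.urandom(HANDSHAKE_CHUNK_SIZE - 8))
    return c0 + c1


if __name__ == "__main__":
    message = build_c0_c1()
    # A real client would now sendall() this on a TCP socket to DEFAULT_RTMP_PORT
    # and wait for the server's S0, S1, and S2.
    print(len(message), message[0])
```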

Strengths:

- Low latency.

- Still widely used for contribution (sending live video from an encoder to a streaming server like YouTube or Twitch).

Weakness:

- Outdated for playback (Flash is dead, no browser support).

Best for: Ingesting live video into a platform (before it’s converted to HLS/DASH).

5. WebRTC (Web Real-Time Communication)

Created by: Google (open-sourced in 2011), standardized by the W3C and IETF

It is the go-to choice for ultra-low latency and real-time interactivity.

How it works: Sends video peer-to-peer (or via a server) with extremely low delay, often under 500 ms.
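Much of WebRTC’s peer-to-peer ability comes from ICE, which uses STUN Binding requests to discover each peer’s public address behind NAT. As a hedged illustration, the Python sketch below builds and checks the 20-byte STUN message header defined in RFC 5389. It omits attributes, message integrity, and everything else a real WebRTC stack (ICE, DTLS-SRTP) handles, and the function names are hypothetical.

```python
from __future__ import annotations

import os
import struct
from typing import Optional

STUN_BINDING_REQUEST = 0x0001
STUN_MAGIC_COOKIE = 0x2112A442
STUN_HEADER_SIZE = 20


def build_binding_request(transaction_id: Optional[bytes] = None) -> bytes:
    """Build a STUN Binding request that carries no attributes (header only)."""
    if transaction_id is None:
        transaction_id = os.urandom(12)  # 96-bit random transaction ID
    if len(transaction_id) != 12:
        raise ValueError("transaction ID must be 12 bytes")
    # Message type, message length (0 bytes of attributes), magic cookie, transaction ID.
    return struct.pack(">HHI", STUN_BINDING_REQUEST, 0, STUN_MAGIC_COOKIE) + transaction_id


def parse_header(packet: bytes) -> tuple[int, int, bytes]:
    """Return (message type, attribute length, transaction ID), checking the magic cookie."""
    if len(packet) < STUN_HEADER_SIZE:
        raise ValueError("packet is shorter than a STUN header")
    msg_type, length, cookie = struct.unpack_from(">HHI", packet, 0)
    if cookie != STUN_MAGIC_COOKIE:
        raise ValueError("magic cookie mismatch: not a STUN message")
    return msg_type, length, packet[8:STUN_HEADER_SIZE]
```

A client would send this over UDP to a STUN server and match the reply by transaction ID; the server’s response carries the client’s public address in an attribute that this sketch does not parse.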

Strengths:

- Ultra-low latency.

- Built into browsers without plugins.

- Great for interactivity (video calls, gaming, live auctions).

Weakness:

- Harder to scale to millions of viewers compared to HLS/DASH.

Best for: Real-time communication (Google Meet, browser-based video calls, interactive live streams).

Streaming Protocols vs Codecs vs Container Formats

1. Video Streaming Protocols

Streaming protocols are the “delivery rules” — the guidelines that determine how video data travels from the server to the player.

Examples: HLS, MPEG-DASH, WebRTC, RTMP.

Role: Decide how video is transmitted, chunked, and played back across the internet.

Analogy: Like the roads and traffic rules that cars follow.

2. Codecs

Codecs are the “language of compression” — how raw video/audio is encoded and decoded.

Examples: H.264/AVC, H.265/HEVC, VP9, AV1, AAC.

Role: Shrink file sizes while keeping good quality; the player must support the codec to play the stream.

Analogy: Like the language spoken inside the car; both sender and receiver must understand it.

3. Container Formats

Container formats are the “packaging” — how video, audio, subtitles, and metadata are bundled together.

Examples: MP4, MKV, TS, fMP4.

Role: Ensure all parts of the stream stay together in one file or segment.

Analogy: Like the car itself, carrying passengers (video, audio, subtitles) down the road.
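One way to see the difference between a container and a codec: the container can often be recognized from its first bytes, while the codec is only described inside it (for example, in an MP4 sample description or an MPEG-TS program map table). The Python sketch below guesses the container from a few well-known signatures; it is a heuristic with a hypothetical function name, not a full format detector.

```python
def sniff_container(header: bytes) -> str:
    """Guess a container format from the first bytes of a file or segment (heuristic)."""
    # MPEG-TS: fixed 188-byte packets, each starting with the sync byte 0x47.
    if len(header) >= 189 and header[0] == 0x47 and header[188] == 0x47:
        return "MPEG-TS"
    # Matroska/WebM: files begin with the 4-byte EBML magic number.
    if header[:4] == b"\x1a\x45\xdf\xa3":
        return "Matroska/WebM"
    # MP4/fMP4: the first box type sits at bytes 4-7 (ftyp in files, styp or moof in segments).
    if header[4:8] in (b"ftyp", b"styp", b"moof"):
        return "MP4/fMP4"
    return "unknown"


if __name__ == "__main__":
    print(sniff_container(b"\x00\x00\x00\x18ftypisom"))
```

An MPEG-TS segment and an fMP4 segment can carry exactly the same H.264 video, so the result says nothing about the codec inside.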

In Conclusion…

- Protocol = How the video travels (HLS, DASH).

- Codec = How the video is compressed (H.264, AV1).

- Container = How video + audio + metadata are packaged (MP4, MKV).

Content Writer

Anush Sargsyan is a content writer specializing in B2B content about OTT streaming technologies and digital media innovation. She creates informative, engaging content on video delivery, OTT monetization, and modern media technologies. The goal is to help readers easily understand complex ideas. Her writing is the bridge between technical detail and practical insight, making advanced concepts accessible for both industry professionals and general audiences.

Related terms

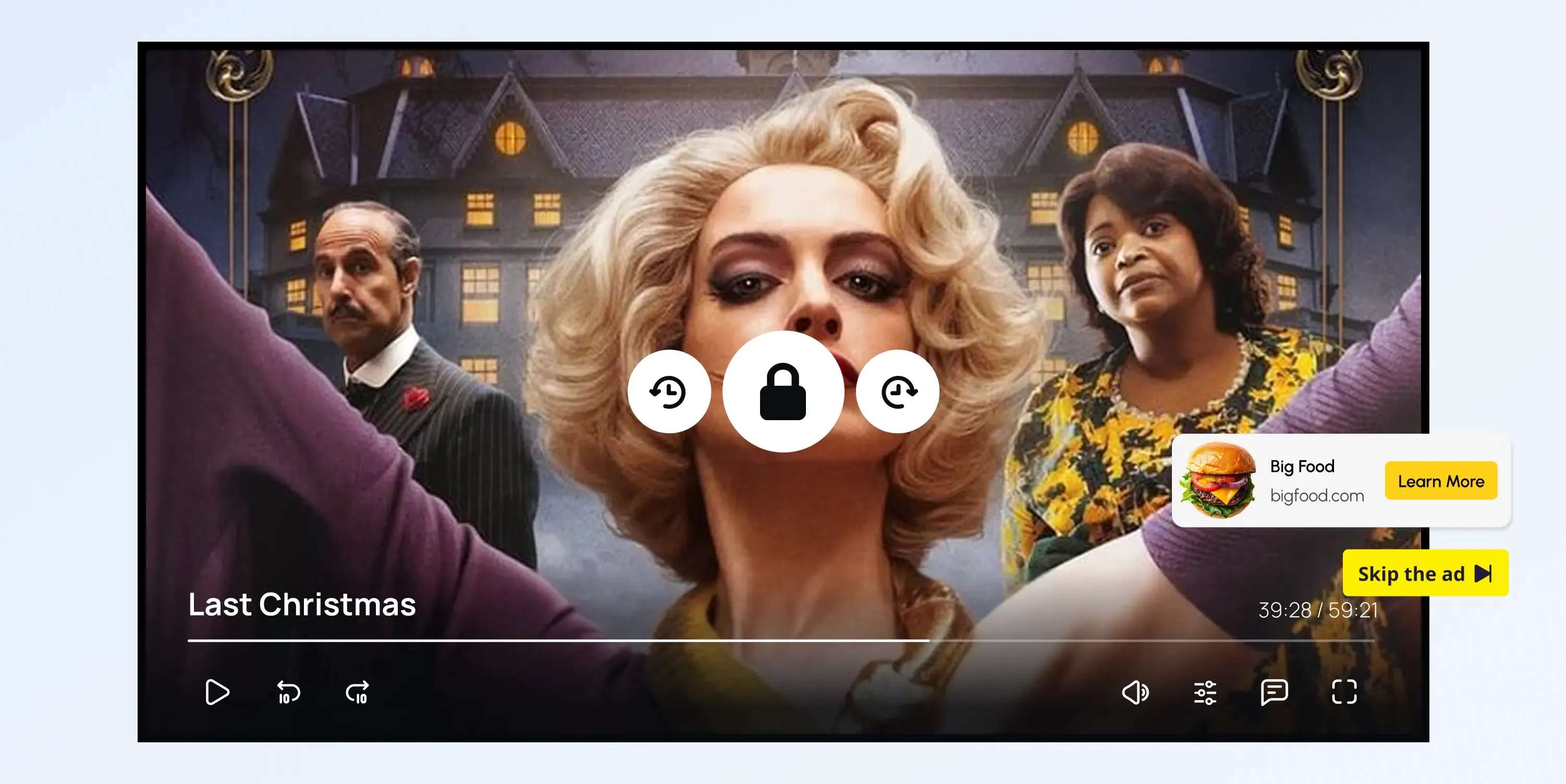

AVOD (Advertising-Based Video on Demand)

Explore how AVOD works, its monetization model, and why it's popular for free streaming platforms. Read the full definition on inorain.com glossary.

CSAI (Client-Side Ad Insertion)

Learn how CSAI delivers ads through the video player, enabling targeting, tracking, and monetization.