What is Video Encoding? A Guide for Streaming Success

Have you ever wondered how videos make it onto DVDs and Blu-ray discs? It all comes down to video encoding—the technology behind both physical media and online streaming. Video encoders help content creators and OTT platforms deliver high-quality content across multiple devices worldwide.

In this guide, we’ll talk about video encoding, how it works, key formats, and its role in streaming.

What is Video Encoding?

Video encoding is the process of converting raw footage into a compressed digital format. Raw footage contains everything the camera’s sensor captures, including colors, details, and lighting.

For a video recorded at 30 frames per second (FPS), you would have 30 still photos per second of footage. That’s 1,800 images per minute of video! As a result, the video’s file size can be quite big, making it harder to store, stream, send, and receive. Video encoding solves this problem by compressing the file into smaller sizes that are easier to distribute.
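
To put a rough number on "quite big", the sketch below estimates the size of one minute of uncompressed video. The resolution (1920×1080) and the 3 bytes per pixel (8-bit RGB) are assumptions chosen for illustration; real cameras often store color differently, so treat the result as an order-of-magnitude figure.

```python
def raw_video_bytes(width, height, fps, seconds, bytes_per_pixel=3):
    """Estimate uncompressed video size: every frame is stored as a full image."""
    frames = fps * seconds                      # 30 FPS * 60 s = 1,800 frames
    return width * height * bytes_per_pixel * frames

# Assumed example: one minute of 1080p footage at 30 FPS, 8-bit RGB.
size = raw_video_bytes(1920, 1080, fps=30, seconds=60)
print(f"{size / 1e9:.1f} GB per minute")        # prints "11.2 GB per minute"
```

At roughly 11 GB per minute under these assumptions, raw footage is impractical to stream, which is the gap encoding closes.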

The video encoding process

Encoding is crucial for streaming because it compresses raw video (and, for analog sources, first digitizes the signal) into files optimized for online distribution and storage. This allows for smooth playback across various platforms and reduces buffering and bandwidth issues.

Most mobile devices already have video encoders embedded into apps or the phone’s camera. In these cases, the priority is transporting the video from one point to the other. This is useful for simple broadcasts.

For more professional broadcasts, hardware encoders or computer software like Open Broadcaster Software (OBS Studio) are required. These encoders let content distributors change and specify the settings of their files (like which codecs they’d like to use). Some encoders also offer features like video mixing and watermarking.

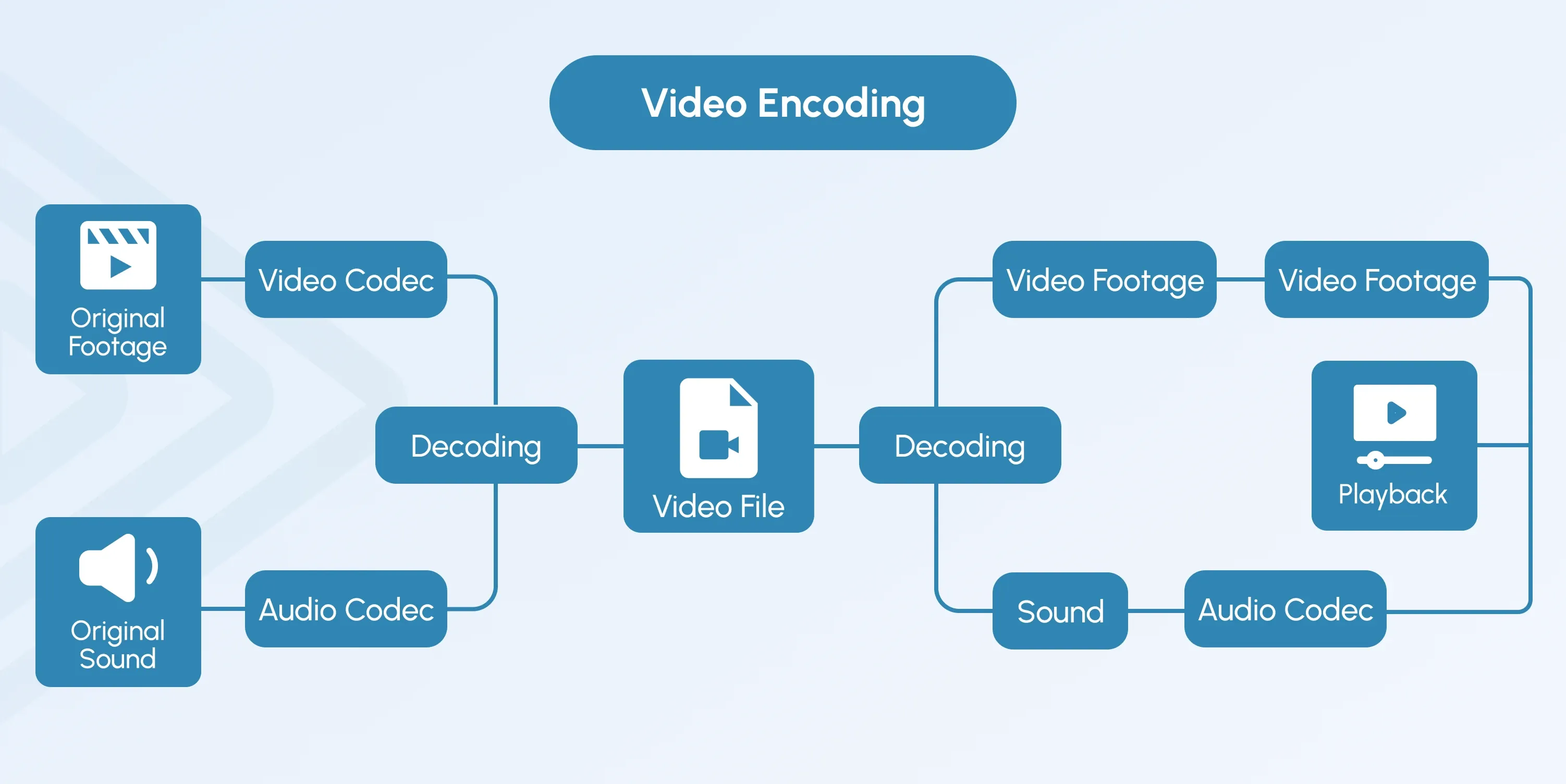

How Video Encoding Works

Video encoding works by compressing large files while keeping the loss in quality as small as possible. This can be done by lowering the resolution or bitrate, or by removing redundant data. The steps are as follows:

Step 1: Frame Analysis

The encoder scans video frames to identify important visual elements based on user-defined settings.

Step 2: Compression with Codecs

Encoders use algorithms (codecs) to condense the size of digital video files. They compare each frame to the previous frame and either remove redundant information or reference identical frames instead of storing them separately.
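
The idea of storing only what changed between frames can be sketched in a few lines. This is a deliberately simplified illustration, not how H.264 or any real codec works internally: production codecs predict blocks of pixels with motion compensation rather than comparing individual pixels. The function names and the tiny 8-pixel "frames" are hypothetical.

```python
def encode_delta(prev, curr):
    """Return (index, new_value) pairs for the pixels that differ from the previous frame."""
    return [(i, c) for i, (p, c) in enumerate(zip(prev, curr)) if p != c]

def apply_delta(prev, delta):
    """Rebuild the current frame from the previous frame plus the stored changes."""
    frame = list(prev)
    for i, value in delta:
        frame[i] = value
    return frame

# A one-row "frame" of grayscale pixels; a bright object moves one pixel to the right.
keyframe   = [10, 10, 10, 10, 200, 200, 10, 10]
next_frame = [10, 10, 10, 10, 10, 200, 200, 10]

delta = encode_delta(keyframe, next_frame)
print(delta)                                   # prints [(4, 10), (6, 200)]
assert apply_delta(keyframe, delta) == next_frame
```

Only two of the eight pixels need to be stored for the second frame; the rest are referenced from the first.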

Step 3: Transcoding

Once the video is encoded, it may not be compatible with every device or network condition. That’s where video transcoding comes in. It takes the encoded video and generates different versions optimized for various devices, bandwidths, and platforms. Video transcoding is essential for adaptive bitrate streaming (ABR), where viewers receive the best quality video their internet connection can support.
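
As a rough sketch of how adaptive bitrate selection might work on the player side, the example below picks the highest-quality version that fits the viewer's measured bandwidth. The bitrate ladder values, the 20% safety headroom, and the function name are all assumptions made for illustration; real players use more elaborate heuristics.

```python
# Hypothetical bitrate ladder produced by transcoding: (label, video bitrate in kbps),
# ordered from highest to lowest quality.
LADDER = [
    ("1080p", 5000),
    ("720p", 2800),
    ("480p", 1400),
    ("360p", 800),
]

def pick_rendition(bandwidth_kbps, ladder=LADDER, headroom=0.8):
    """Return the best rendition whose bitrate fits within a share of the bandwidth."""
    budget = bandwidth_kbps * headroom         # keep some bandwidth in reserve
    for name, bitrate in ladder:
        if bitrate <= budget:
            return name
    return ladder[-1][0]                       # fall back to the lowest quality

print(pick_rendition(10000))                   # prints 1080p
print(pick_rendition(4000))                    # prints 720p
print(pick_rendition(500))                     # prints 360p
```

Without transcoding there would be only one version to choose from, so viewers on slow connections would buffer and viewers on fast ones would get less quality than they could.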

Step 4: Packaging Into Containers

The encoded and transcoded files are then packaged into a container format (e.g., MP4, MOV) along with audio, subtitles, and metadata.

Different containers are compatible with different codecs and video players. Typically, the names of the file formats correspond with the types of containers that they use. For example, the MOV encoding file format simply means that it’s using a MOV container.
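
To make the codec/container distinction concrete, here is a small sketch that builds a command line for FFmpeg, a widely used open-source encoder that this article does not otherwise cover. The file names, target height, and bitrates are hypothetical. The command is only assembled and printed, not executed, so it runs without FFmpeg installed.

```python
def build_ffmpeg_cmd(src, dst, height=720, video_kbps=2800, audio_kbps=128):
    """Build (but do not run) an ffmpeg command as a list of arguments."""
    return [
        "ffmpeg",
        "-i", src,                        # input file (here a MOV container)
        "-c:v", "libx264",                # video codec: H.264
        "-b:v", f"{video_kbps}k",         # target video bitrate
        "-vf", f"scale=-2:{height}",      # resize to the given height, keep aspect ratio
        "-c:a", "aac",                    # audio codec: AAC
        "-b:a", f"{audio_kbps}k",         # target audio bitrate
        dst,                              # container is inferred from the extension
    ]

cmd = build_ffmpeg_cmd("input.mov", "output.mp4")
print(" ".join(cmd))
```

Note that the codec (`libx264`) and the container (`.mp4`) are chosen separately: the same H.264 video could also be placed in a MOV container by changing only the output file name.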

Step 5: Video Decoding

A video decoder decompresses the file, allowing it to play smoothly on streaming platforms.

On mobile devices, encoding usually occurs as the content is captured. For live streaming, however, broadcasters also have to send the encoded stream over a video streaming protocol such as the Real-Time Messaging Protocol (RTMP) or Secure Reliable Transport (SRT). These protocols are sets of rules that govern how data is transmitted over a network. They handle tasks like data segmentation, error correction, buffering, and synchronization.

The Difference Between Video Encoding and Decoding

The main difference between video encoding and decoding is the direction in which data is processed. Decoding is the reverse of encoding: it decompresses the encoded data so that it can be displayed. This step is necessary after transmission because a screen can only show uncompressed frames, and an efficient decoder keeps playback smooth and preserves the quality of the encoded video.

In online streaming, the video player acts as the decoder, processing the encoded video for display. Devices like set-top boxes, PlayStation, and Xbox also function as video decoders.

For a smooth streaming experience, video encoders and decoders must work together, ensuring that compressed video files can be efficiently transmitted and properly played back across different devices.

What are Popular Video Encoding Formats?

The most popular video encoding format is H.264 or AVC (Advanced Video Coding), because almost every device supports it. Developed by the International Telecommunication Union (ITU-T) and the ISO/IEC Moving Picture Experts Group (MPEG), it’s the standard for online streaming, Blu-ray discs, and cable broadcasting.

Other common video encoding formats include:

VP9

Developed by Google and released in 2013, VP9 is an open-source, royalty-free alternative to H.264 (AVC). It’s widely used for web streaming and is supported by most modern browsers and devices. According to Google, more than 90% of WebRTC videos encoded in Chrome use VP9 or its predecessor, VP8.

That said, although VP9 offers better compression efficiency than AVC, its adoption outside of web-based platforms has been limited.

HEVC (H.265)

H.265, also known as High-Efficiency Video Coding (HEVC), was created for better file compression while maintaining the same video quality. It uses less bandwidth and supports high-resolution streaming.

With H.265, you can deliver 4K, HDR, and even 8K streaming with reduced bandwidth usage. Despite being designed as the successor to H.264, however, it has been adopted more slowly than expected. This is partially due to confusion around its royalties and what developers will have to pay to use it.

AV1

Major tech players Amazon, Netflix, Cisco, Microsoft, Google, and Mozilla, under the consortium Alliance for Open Media, created the video encoding format AV1 in light of the royalty confusion around HEVC. It offers better compression efficiency than both H.264 and HEVC, making it ideal for high-resolution streaming. This codec is royalty-free and open source but it’s computationally intensive, requiring more processing power, which makes it more expensive to use.

VVC (H.266)

Versatile Video Coding (H.266), finalized in 2020, was designed as the successor to HEVC, offering better compression while maintaining the same video quality. However, patent licensing concerns have slowed adoption, making this video encoding format less common in the industry.

Key Components of Video Encoding

Video encoding involves multiple compression techniques and encoding tools, each playing a crucial role in optimizing video quality and file size. Here’s a breakdown of the essential components, starting with the compression types:

| Compression Type | Description | Example Usage |
|---|---|---|
| Lossless Compression | Reduces file size while maintaining data integrity, allowing the original file to be fully restored when decoded. | ZIP files, PNG images, FLAC audio |
| Lossy Compression | Permanently discards the least noticeable data to minimize file size while maintaining acceptable quality. | Streaming videos, JPEG images, MP3 audio |
| Temporal Compression | Breaks video into keyframes and delta frames, storing only the changes between frames to reduce bitrate. | Video streaming, MPEG and H.264 video codecs |
| Spatial Compression | Reduces redundancy within a single frame by encoding only the differences between neighboring pixel groups. | Video with static backgrounds, such as news broadcasts |
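
The difference between the first two rows of the table can be shown with Python's standard `zlib` module. This is a toy illustration on a short byte sequence, not video data: the lossless path restores every byte, while the lossy path first rounds values (a crude stand-in for the quantization real codecs perform) and can never recover the originals. The sample values and the `quantize` helper are assumptions made for the example.

```python
import zlib

# Toy "image row": a short repeating pattern of brightness values.
samples = bytes([100, 101, 102, 103, 100, 101, 102, 103] * 32)

# Lossless: the decompressed data is byte-for-byte identical to the original.
packed = zlib.compress(samples)
assert zlib.decompress(packed) == samples

def quantize(data, step=4):
    """Round each value down to a multiple of `step`, discarding fine detail."""
    return bytes((b // step) * step for b in data)

# Lossy: rounding throws information away before compression.
lossy = quantize(samples)
lossy_packed = zlib.compress(lossy)
assert zlib.decompress(lossy_packed) == lossy   # the rounded data round-trips...
assert lossy != samples                         # ...but the original is gone

print(len(samples), len(packed), len(lossy_packed))
```

The printed sizes show the trade-off: the rounded data is more uniform, so it typically compresses further, at the cost of detail that cannot be restored.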

Encoding Hardware vs. Software

Encoding can happen within a browser or mobile app, on an IP camera, using software, or via a stand-alone appliance. Dedicated software and hardware encoders make the video encoding process more efficient by offering more advanced configurations and precise control over the compression parameters. You can optimize the video quality, bitrate, resolution, and other aspects to meet your specific requirements and deliver the best possible viewing experience.

Over the years, software encoders have become more popular than dedicated hardware because they are easier to access and more cost-effective. Common software options for live streaming content include vMix, Wirecast, and the free-to-use OBS Studio. Hardware encoders from vendors like Videon, AJA, Matrox, and Osprey offer purpose-built solutions for professional live broadcasting. Plenty of producers also use a mix of software and hardware encoding solutions, depending on their unique workflows.

Benefits of Efficient Video Encoding

With efficient video encoding, you can:

Easily Share Content

Smaller, compressed video files are easier to send via email, messaging apps, or cloud-based collaboration tools, which often have file size limitations.

Maximize Storage Space (and Save Money)

Smaller file sizes also mean you can make the most of your external hard drive or online cloud storage, which usually charges more money based on how much space your content takes up.

Improve the Viewer Experience

Video encoding minimizes the bandwidth needed for high-quality streams, reducing latency and improving the viewing experience—especially during a live stream. No more buffering!

Scale Your Content

Video encoding ensures compatibility across multiple devices, various platforms, and locations. This is important for content creators who want to reach more viewers.

Improve Streaming Security

Enterprise streams, for example, often contain proprietary information that needs to be kept safe. Encoders that support digital rights management (DRM) and encryption protocols add a layer of protection to your video content.

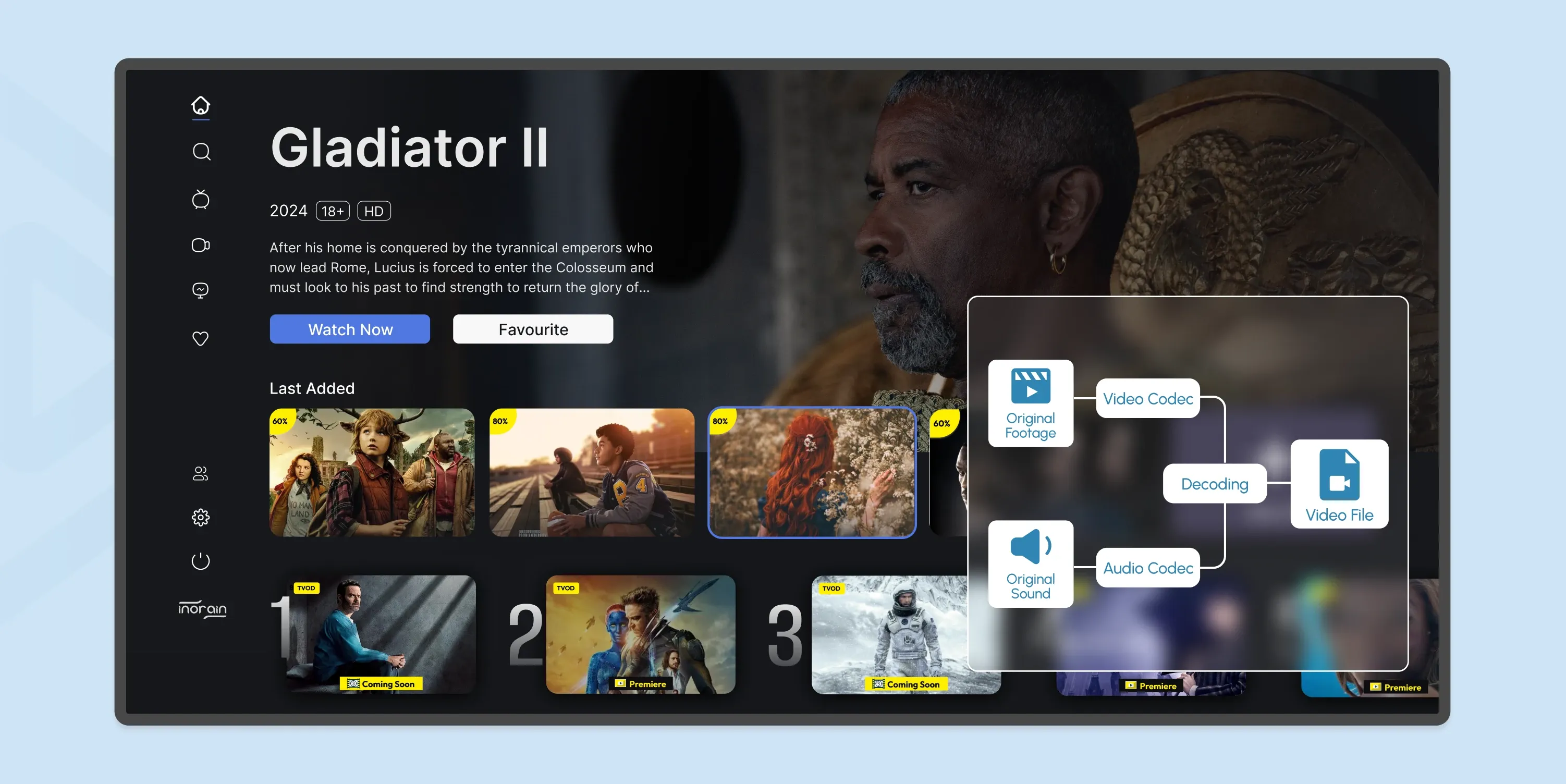

How Does inoRain Optimize Video Encoding for OTT and P2P Streaming?

inoRain enhances video encoding for Over-The-Top (OTT) and Peer-to-Peer (P2P) streaming through several key strategies:

- We implement adaptive bitrate streaming (ABR), which ensures that video quality dynamically adjusts to viewers’ internet connections.

- Our flexible transcoding solutions enable content to be streamed in the highest possible quality, supporting resolutions up to 4K and 8K.

- Recognizing the diversity of viewer preferences, our platform is compatible with over 10 devices, including smartphones, tablets, TV boxes, PCs, smart TVs, and web browsers.

- By leveraging Content Delivery Network (CDN) integration, we accelerate content distribution, reducing latency and enhancing the viewer experience.

- We also provide a revenue-focused analytics dashboard, allowing content providers to monitor key performance metrics and make informed decisions.

Whether you’re building a branded OTT platform or a custom OTT app, inoRain equips you with powerful tools to optimize video encoding, enhance content delivery, and maximize monetization opportunities.

The Future of Video Encoding

The future of video encoding is facing tremendous change, with technology, user preferences, brand engagement, and other factors redefining the parameters. Some of the trends informing this transition include:

How Video is Viewed

In recent years, we’ve gone from mainly watching videos on TVs, monitors, and computer/laptop screens to consuming content on mobile devices, digital billboards, and consoles. Now it’s changing again as we move to head-mounted displays (like VR headsets), in-vehicle screens (such as those in Tesla cars), and other smart devices (like the Apple Watch).

How Video is Evaluated

As holographic renditions and augmented reality (AR) become more commonplace, there will be a need to blend captured and synthetic content seamlessly. This will require new codecs capable of handling diverse needs quickly and at high quality.

How AI is Integrated

With the rise of artificial intelligence (AI) and machine learning (ML), the way video content is delivered and experienced is also changing. These technologies can adjust video quality and bitrate dynamically based on viewer behavior and environmental variables. They can also facilitate more accurate content recommendations based on viewer preferences.

Hardware Encoders Making a Comeback

Video Processing Units (VPUs) utilizing ASICs (Application-Specific Integrated Circuits) are becoming increasingly popular again in data centers. VPUs can handle higher throughput of video data with lower power consumption compared to software-based solutions. This is in response to an influx of video-based content globally as companies realize the marketing benefits.

Low Latency Streaming

In domains where real-time engagement is essential (such as live broadcasts, online gaming, or interactive webinars), low latency streaming is becoming increasingly important. For instance, Twitch and YouTube Live are refining their streaming technologies to offer latency as low as a few seconds. Low latency improves the interaction between content and its viewers, allowing instantaneous feedback through comments and reactions.

Technological advancements such as WebRTC (Web Real-Time Communication) are instrumental in achieving these low latency streams, enabling peer-to-peer communications directly in the web browser without the need for complex server-side processing.

Co-founder / CTO

Armen is the CTO and Co-Founder of inoRain OTT and Co-Founder of HotelSmarters, specializing in advanced streaming technologies, OTT strategy, and interactive TV systems. He builds scalable end-to-end video delivery solutions and drives technical innovation across hospitality and streaming platforms, bridging complex engineering with practical business impact.
